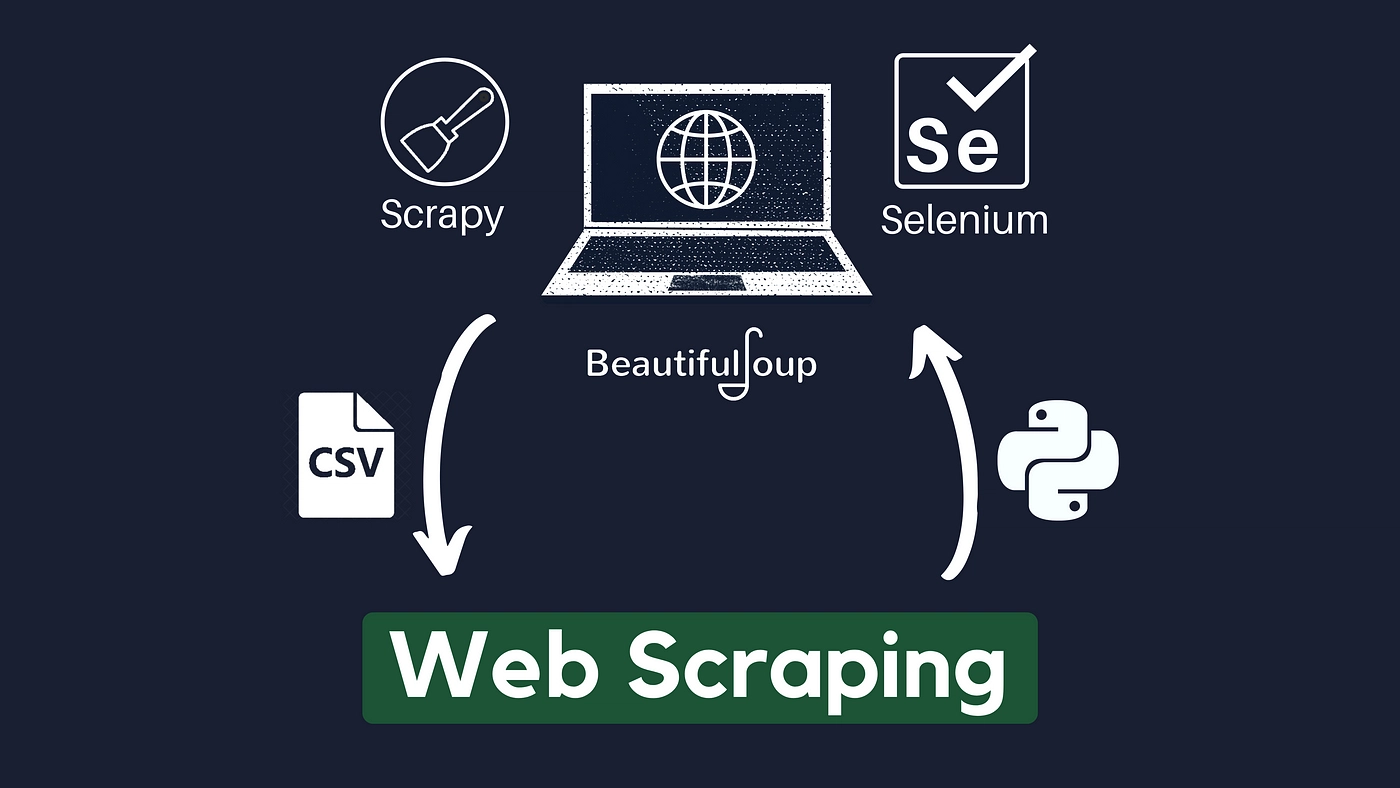

Web scraping is a powerful technique for data scientists. It offers a way to obtain real-time data for analysis, build datasets, and enrich machine learning models. Whether you are collecting product information, analyzing customer reviews, or monitoring financial data, the ability to scrape web data efficiently is an indispensable tool in a data scientist's toolkit. However, successful web scraping goes beyond simple extraction: it requires deliberate techniques to ensure the data is clean, structured, and ready for analysis. Here, we'll look at some of the best web scraping techniques to improve your data collection efforts and strengthen your analytical capabilities.

1. Mastering CSS Selectors for Precise Data Extraction

One of the most powerful techniques in web scraping is using CSS selectors to target specific HTML elements. A CSS selector is a string that identifies elements on a web page based on properties such as their class, id, or tag name. This lets data scientists extract exactly the data they need without manually sifting through messy HTML.
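
To make the selector types concrete, here is a small sketch that runs a few common selectors against an inline HTML snippet. The markup, class names, id, and `data-sku` attribute are invented for illustration. In BeautifulSoup, `select()` returns a list of every match, while `select_one()` returns the first match or `None`.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded page
html = """
<div id="catalog">
  <div class="product" data-sku="A1">
    <h2 class="product-name">Product A</h2>
    <span class="product-price">$19.99</span>
  </div>
  <div class="product" data-sku="B2">
    <h2 class="product-name">Product B</h2>
    <span class="product-price">$29.99</span>
  </div>
</div>
<footer><a href="/about">About</a></footer>
"""

soup = BeautifulSoup(html, "html.parser")

by_tag = soup.select("h2")                            # every <h2> element
by_class = soup.select(".product-price")              # elements with class="product-price"
by_id = soup.select_one("#catalog")                   # the element with id="catalog"
by_attribute = soup.select('div[data-sku="B2"]')      # <div> elements whose data-sku is "B2"
descendants = soup.select("#catalog .product-name")   # .product-name anywhere inside #catalog

print([el.get_text() for el in by_class])
```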

For example, when scraping product listings from an e-commerce site, you can use CSS selectors to target the product name, price, and rating without picking up irrelevant content such as navigation menus or footers. Libraries such as BeautifulSoup in Python make it easy to apply CSS selectors to the parsed HTML and extract only the data you need, which streamlines the scraping process and keeps unneeded elements out of your results.

Example:

```python
from bs4 import BeautifulSoup
import requests

# Send an HTTP request to get the web page content
url = "https://example.com/products"
response = requests.get(url)
soup = BeautifulSoup(response.text, "html.parser")

# Use CSS selectors to extract product names and prices
products = soup.select('.product-name')
prices = soup.select('.product-price')

for product, price in zip(products, prices):
    print(f"Product: {product.text}, Price: {price.text}")
```
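
One caveat with the example above is that `zip()` pairs the two lists purely by position. If a single product is missing its price, every later name gets matched with the wrong price. A more robust pattern is to select each product's container first and then query inside it. The sketch below assumes each product sits in its own element with a hypothetical `.product` class:

```python
from __future__ import annotations

from bs4 import BeautifulSoup

# Hypothetical listing in which the second product has no price element
html = """
<div class="product"><h2 class="product-name">Product A</h2><span class="product-price">$19.99</span></div>
<div class="product"><h2 class="product-name">Product B</h2></div>
<div class="product"><h2 class="product-name">Product C</h2><span class="product-price">$39.99</span></div>
"""

def extract_products(page_html: str) -> list[dict]:
    """Return one record per product card; missing fields become None."""
    soup = BeautifulSoup(page_html, "html.parser")
    records = []
    for card in soup.select(".product"):
        # select_one searches only inside this card and returns None if nothing matches
        name = card.select_one(".product-name")
        price = card.select_one(".product-price")
        records.append({
            "name": name.get_text(strip=True) if name else None,
            "price": price.get_text(strip=True) if price else None,
        })
    return records

for record in extract_products(html):
    print(record)
```

Because every lookup is scoped to one card, a missing field produces a `None` for that product only, and the remaining name/price pairs stay aligned.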

2. Handling Dynamic Content with Selenium

Modern websites often use JavaScript to load content dynamically after the initial page load. This can make traditional tools such as BeautifulSoup and Requests ineffective, because Requests only downloads the initial HTML and BeautifulSoup can only parse what that HTML contains. The problem is especially common on sites that use infinite scrolling, AJAX requests, or interactive elements. To access this dynamic content, Selenium is an essential tool.

Selenium is a browser automation tool that lets you simulate real user behavior, such as clicking buttons, scrolling through pages, or waiting for elements to load. Because it controls a real browser (such as Chrome or Firefox), Selenium can scrape content that is rendered dynamically, which makes it well suited to sites that rely heavily on JavaScript.

Example:

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
import time

# Initialize the WebDriver
driver = webdriver.Chrome()

# Open the web page
driver.get('https://example.com/dynamic-content')

# Wait for elements to load
time.sleep(5)

# Extract the dynamic content after it has been rendered
dynamic_content = driver.find_elements(By.CLASS_NAME, 'dynamic-class')
for content in dynamic_content:
    print(content.text)

# Close the browser
driver.quit()
```
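
A fixed `time.sleep(5)` either wastes time when the page is fast or fails when it is slower than expected. Selenium's built-in answer is an explicit wait, for example `WebDriverWait(driver, 10).until(EC.presence_of_all_elements_located((By.CLASS_NAME, "dynamic-class")))`, with `WebDriverWait` imported from `selenium.webdriver.support.ui` and `expected_conditions` imported as `EC`. The library-free sketch below shows the polling idea behind such waits, so it can run without a browser. `wait_until` and the fake page are hypothetical names used for illustration only.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def wait_until(condition: Callable[[], T], timeout: float = 10.0, interval: float = 0.5) -> T:
    """Call `condition` repeatedly until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # e.g. a non-empty list of elements
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)

# With the driver from the example above, usage would look like (not executed here):
# elements = wait_until(lambda: driver.find_elements(By.CLASS_NAME, "dynamic-class"))

# Self-contained demo: a fake "page" whose elements appear on the third poll
polls = {"count": 0}

def fake_find_elements():
    polls["count"] += 1
    return ["item 1", "item 2"] if polls["count"] >= 3 else []

found = wait_until(fake_find_elements, timeout=2.0, interval=0.01)
print(found)
```

The loop returns as soon as the elements exist and fails loudly after the timeout, instead of always paying the worst-case delay.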

3. Efficiently Navigating Through Pagination

Many websites spread data across multiple pages, such as search results or product catalogs. To collect all of the data, you need to handle pagination (the process of navigating through multiple pages of content) efficiently. If it is not handled correctly, scraping paginated content can lead to incomplete datasets or inefficient scraping.

One effective approach is to identify the URL pattern a website uses for pagination. For example, sites often change a page number in the URL (e.g., `page=1`, `page=2`, etc.). You can then navigate through these pages programmatically, extracting data from each one in a loop.

Example:

```python
import requests
from bs4 import BeautifulSoup

base_url = "https://example.com/products?page="

for page in range(1, 6):  # Scrape the first 5 pages
    url = f"{base_url}{page}"
    response = requests.get(url)
    soup = BeautifulSoup(response.text, "html.parser")

    # Extract data from each page
    products = soup.select('.product-name')
    for product in products:
        print(product.text)
```
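
Hard-coding `range(1, 6)` only works when you already know how many pages exist. A common alternative, sketched below, keeps requesting pages until one comes back empty, with `max_pages` as a safety cap. `paginate`, `fake_fetch`, and the fake site are hypothetical; they stand in for real downloads so the logic can be checked offline. In practice `fetch_items` would download the URL and return the `.product-name` matches.

```python
from typing import Callable, Iterator
from urllib.parse import urlencode

def paginate(fetch_items: Callable[[str], list], base_url: str,
             start: int = 1, max_pages: int = 100) -> Iterator:
    """Yield items page by page, stopping at the first empty page or after max_pages."""
    for page in range(start, start + max_pages):
        url = f"{base_url}?{urlencode({'page': page})}"  # e.g. .../products?page=2
        items = fetch_items(url)
        if not items:  # an empty page usually means we have gone past the last one
            return
        yield from items

# Fake site with three pages; a real fetch_items would request the URL and parse the HTML
FAKE_SITE = {
    "https://example.com/products?page=1": ["Product A", "Product B"],
    "https://example.com/products?page=2": ["Product C"],
    "https://example.com/products?page=3": ["Product D"],
}

def fake_fetch(url: str) -> list:
    return FAKE_SITE.get(url, [])

all_products = list(paginate(fake_fetch, "https://example.com/products"))
print(all_products)
```

The cap matters because some sites return the last page, rather than an empty one, for out-of-range page numbers. Without the cap, the loop above would never stop on such a site.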

4. Handling AJAX and API Requests

Web scraping isn't limited to extracting data from HTML. Many modern websites load data asynchronously through AJAX (Asynchronous JavaScript and XML) calls or backend APIs. These requests load data dynamically without refreshing the entire page, which makes them a valuable source of structured data.

As a data scientist, you can identify these AJAX requests by monitoring network activity in the Network tab of your browser's developer tools. Once you have found them, you can skip scraping the HTML and query the underlying API endpoints directly. These endpoints usually return cleaner, more structured data, which is easier to process and analyze.

Example:

```python
import requests

# URL of the API endpoint
api_url = 'https://example.com/api/products'

# Send a GET request to the API
response = requests.get(api_url)

# Parse the returned JSON data
data = response.json()

# Extract and display product details
for product in data['products']:
    print(f"Product: {product['name']}, Price: {product['price']}")
```
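
The request above has no timeout and no error check, so a hung server or an HTTP error response would surface later as a confusing failure. Below is a slightly more defensive sketch. It assumes, as the example above does, that the endpoint returns JSON shaped like `{"products": [{"name": ..., "price": ...}]}`, and it additionally assumes a hypothetical `page` query parameter.

```python
from __future__ import annotations

import requests

API_URL = "https://example.com/api/products"  # placeholder endpoint from the example above

def fetch_products(page: int = 1) -> dict:
    """GET one page of results; the `page` parameter is a hypothetical API feature."""
    response = requests.get(
        API_URL,
        params={"page": page},                   # requests encodes this as ?page=N
        headers={"Accept": "application/json"},
        timeout=10,                              # give up instead of waiting forever
    )
    response.raise_for_status()                  # raise an exception on 4xx/5xx responses
    return response.json()

def extract_products(payload: dict) -> list[tuple[str, float | None]]:
    """Pull (name, price) pairs out of the JSON, tolerating missing keys."""
    rows = []
    for item in payload.get("products", []):
        price = item.get("price")
        rows.append((item.get("name", ""), float(price) if price is not None else None))
    return rows

# Offline check with a payload shaped like the one assumed above
sample = {"products": [{"name": "Product A", "price": "19.99"}, {"name": "Product B"}]}
print(extract_products(sample))
```

Keeping the network call and the parsing in separate functions lets you test the parsing against saved responses without hitting the site.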

5. Implementing Rate Limiting and Respectful Scraping

When scraping large amounts of data, it's essential to use rate limiting to avoid overloading the website's server and getting blocked. Scraping can put a significant load on a site, especially when many requests are made in a short period. Adding delays between requests and respecting the site's robots.txt file is not only an ethical practice but also a practical way to reduce the risk of your IP being banned.
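
Both habits can be combined using Python's standard-library `urllib.robotparser`. The sketch below parses robots.txt rules from a string so it runs offline. Against a real site you would call `set_url("https://example.com/robots.txt")` and then `read()` instead. The user-agent string, the rules, and the `Throttle` helper are hypothetical.

```python
import random
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-research-bot"  # hypothetical user-agent string

# Hypothetical robots.txt contents
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without touching the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

class Throttle:
    """Enforce a minimum gap, plus optional random jitter, between consecutive requests."""

    def __init__(self, min_delay: float, jitter: float = 0.0):
        self.min_delay = min_delay
        self.jitter = jitter
        self._last_request = None

    def wait(self) -> float:
        """Sleep only as long as needed since the previous call; return the seconds slept."""
        slept = 0.0
        if self._last_request is not None:
            target_gap = self.min_delay + random.uniform(0, self.jitter)
            elapsed = time.monotonic() - self._last_request
            slept = max(0.0, target_gap - elapsed)
            time.sleep(slept)
        self._last_request = time.monotonic()
        return slept

rules = load_rules(ROBOTS_TXT)
# Honour the site's Crawl-delay if it declares one, otherwise fall back to 1 second
delay = rules.crawl_delay(USER_AGENT) or 1.0
throttle = Throttle(min_delay=delay, jitter=0.5)

for path in ["/products?page=1", "/private/report"]:
    url = "https://example.com" + path
    if not rules.can_fetch(USER_AGENT, url):
        print("skipping (disallowed by robots.txt):", url)
        continue
    throttle.wait()
    # A real scraper would call requests.get(url, headers={"User-Agent": USER_AGENT}, timeout=10) here
    print("would fetch:", url)
```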

In addition to respecting robots.txt, data scientists should consider tools such as Scrapy, which come with built-in rate limiting features. By setting appropriate delays between requests, you make your scraper behave more like a normal user and avoid disrupting the website's operations.

Example:

```python
import time
import requests

url = "https://example.com/products"

for page in range(1, 6):
    response = requests.get(f"{url}?page={page}")

    # Simulate a human-like delay
    time.sleep(2)  # 2-second delay between requests

    print(response.text)
```
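
If you move to Scrapy, the manual delay in the loop above becomes configuration. The fragment below sketches a project's `settings.py`. The setting names are Scrapy's own, but the values are illustrative assumptions that you should tune for each target site.

```python
# settings.py of a Scrapy project (values are illustrative)
ROBOTSTXT_OBEY = True               # skip URLs disallowed by the site's robots.txt
DOWNLOAD_DELAY = 2                  # base delay in seconds between requests to the same site
RANDOMIZE_DOWNLOAD_DELAY = True     # vary each delay between 0.5x and 1.5x DOWNLOAD_DELAY
CONCURRENT_REQUESTS_PER_DOMAIN = 1  # one request at a time per domain

# AutoThrottle adjusts the delay dynamically based on the server's response latency
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 2
AUTOTHROTTLE_MAX_DELAY = 30
AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
```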

6. Data Cleaning and Structuring for Analysis

Once the data has been scraped, the next important step is cleaning and structuring it. Raw data pulled from the web is often messy, unstructured, or incomplete, which makes it difficult to analyze. Data scientists must transform it into a clean, structured format, such as a Pandas DataFrame, before analysis.

During cleaning, tasks such as handling missing values, normalizing text, and converting date formats are essential. Once the data is clean, data scientists can store it in structured formats such as CSV, JSON, or SQL databases for easier retrieval and further analysis.
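
The cleaning tasks named above can be sketched with pandas as follows. The raw rows, column names, and title-case rule are hypothetical. The pattern is what matters: trim and normalize text, coerce prices to numbers (unparseable values become `NaN`), parse dates, and then drop incomplete and duplicate rows.

```python
import pandas as pd

# Hypothetical raw rows, as they might come out of a scraper
raw = pd.DataFrame({
    "product": ["  Product A ", "product b", "Product C", "Product C", None],
    "price": ["$19.99", "29.99 USD", "$39.99", "$39.99", "N/A"],
    "scraped_on": ["2024-01-05", "2024-01-06", "2024-01-07", "2024-01-07", "2024-01-08"],
})

def clean_products(df: pd.DataFrame) -> pd.DataFrame:
    out = df.copy()
    # Normalize text: trim whitespace and apply consistent title case
    out["product"] = out["product"].str.strip().str.title()
    # Keep only digits and the decimal point, then convert; empty strings become NaN
    out["price"] = pd.to_numeric(
        out["price"].str.replace(r"[^0-9.]", "", regex=True), errors="coerce"
    )
    # Parse date strings into datetime values
    out["scraped_on"] = pd.to_datetime(out["scraped_on"], format="%Y-%m-%d")
    # Drop rows missing required fields, then exact duplicates
    out = out.dropna(subset=["product", "price"]).drop_duplicates()
    return out.reset_index(drop=True)

clean = clean_products(raw)
print(clean)
```

Title case is only one possible normalization rule. It would, for instance, turn "iPhone" into "Iphone", so choose rules that fit your data.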

Example:

```python
import pandas as pd

# Create a DataFrame from the scraped data
data = {'Product': ['Product A', 'Product B', 'Product C'],
        'Price': [19.99, 29.99, 39.99]}
df = pd.DataFrame(data)

# Clean data (e.g., remove unwanted characters, convert types)
df['Price'] = df['Price'].astype(float)

# Save the cleaned data to CSV
df.to_csv('products.csv', index=False)
```
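
The example above writes CSV, but the same DataFrame can go to the other formats mentioned earlier. Here is a sketch using pandas' `to_json` and `to_sql` with Python's built-in `sqlite3` module. The table name and query are illustrative.

```python
import sqlite3
import pandas as pd

df = pd.DataFrame({
    "Product": ["Product A", "Product B", "Product C"],
    "Price": [19.99, 29.99, 39.99],
})

# JSON: one object per row; pass a path such as "products.json" to write a file instead
json_text = df.to_json(orient="records")

# SQL: SQLite ships with Python; replace ":memory:" with a file path such as "products.db" to persist
conn = sqlite3.connect(":memory:")
df.to_sql("products", conn, if_exists="replace", index=False)

# Later analysis can query the table directly
cheap = pd.read_sql(
    "SELECT Product, Price FROM products WHERE Price < ? ORDER BY Price", conn, params=(30,)
)
print(cheap)
conn.close()
```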

Conclusion

Web scraping is a powerful technique that lets data scientists collect and make the most of data from the web. By mastering techniques such as CSS selectors, handling dynamic content with Selenium, managing pagination, and working with APIs, data scientists can access high-quality data for analysis. Respecting rate limits and then cleaning and structuring the scraped data ensures it is ready for further processing and modeling. With these techniques, you can streamline your web scraping process and strengthen your data analysis, making you more efficient and effective in your data-driven projects.